openai burns the boats

the $334 billion machine that openai is aiming at anthropic

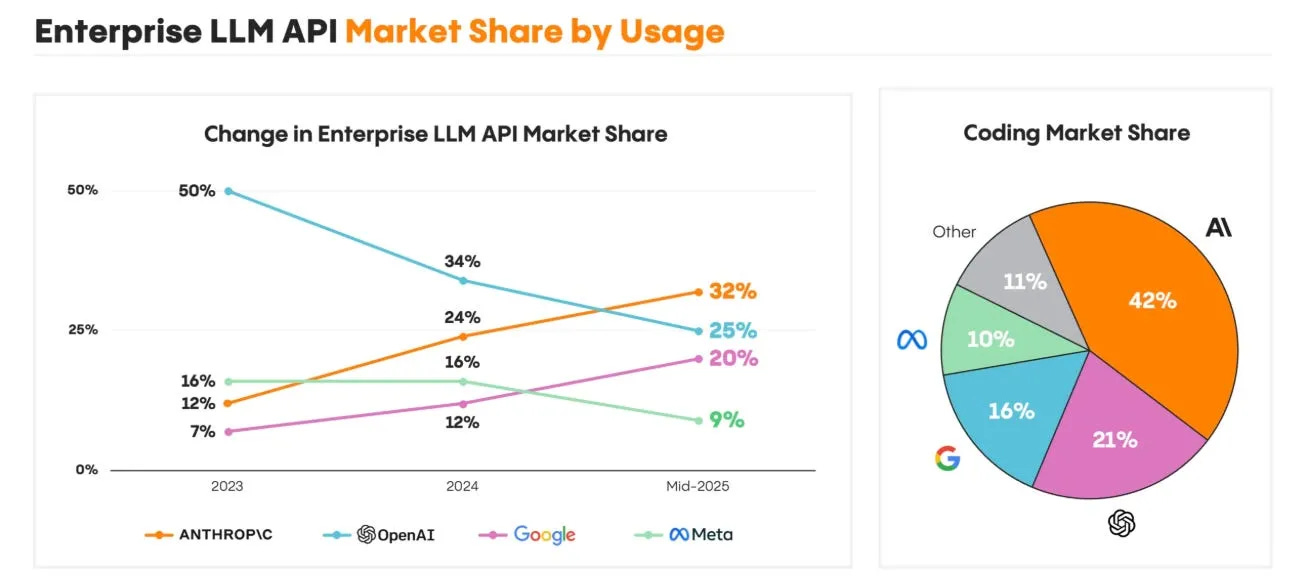

by mid-2025, anthropic had done the impossible. they'd taken 32% of the enterprise llm api market from openai. up from 12% just eighteen months earlier. inference was profitable for anthropic. not "path to profitability." not "unit economics positive." actually profitable, according to an interview with their ceo

now if this is true for anthropic, it could also be true for openai, which points to a world where training models and providing inference earns ec2-like margins over the long term

then last week, openai dropped gpt-5 at $10 per million tokens

at $10 against $75 per million for opus, that’s 7.5x cheaper than anthropic’s flagship, with on-par benchmark performance. it’s openai’s declaration of its willingness to destroy the margins in “training and delivering” inference.

so, why?

to understand this, we need to first understand why disneyland makes parking free

why would you set your own house on fire?

recommended reading:

Joel Spolsky: https://www.joelonsoftware.com/2002/06/12/strategy-letter-v/

Gwern: https://gwern.net/complement

joel spolsky wrote about this in 2002. gwern expanded on it. but the principle is older than software: commoditize your complements

pick the one thing that gives your business its profit margins and focus on making money there. then commoditize everything that is consumed alongside it [complements, for the economics majors]:

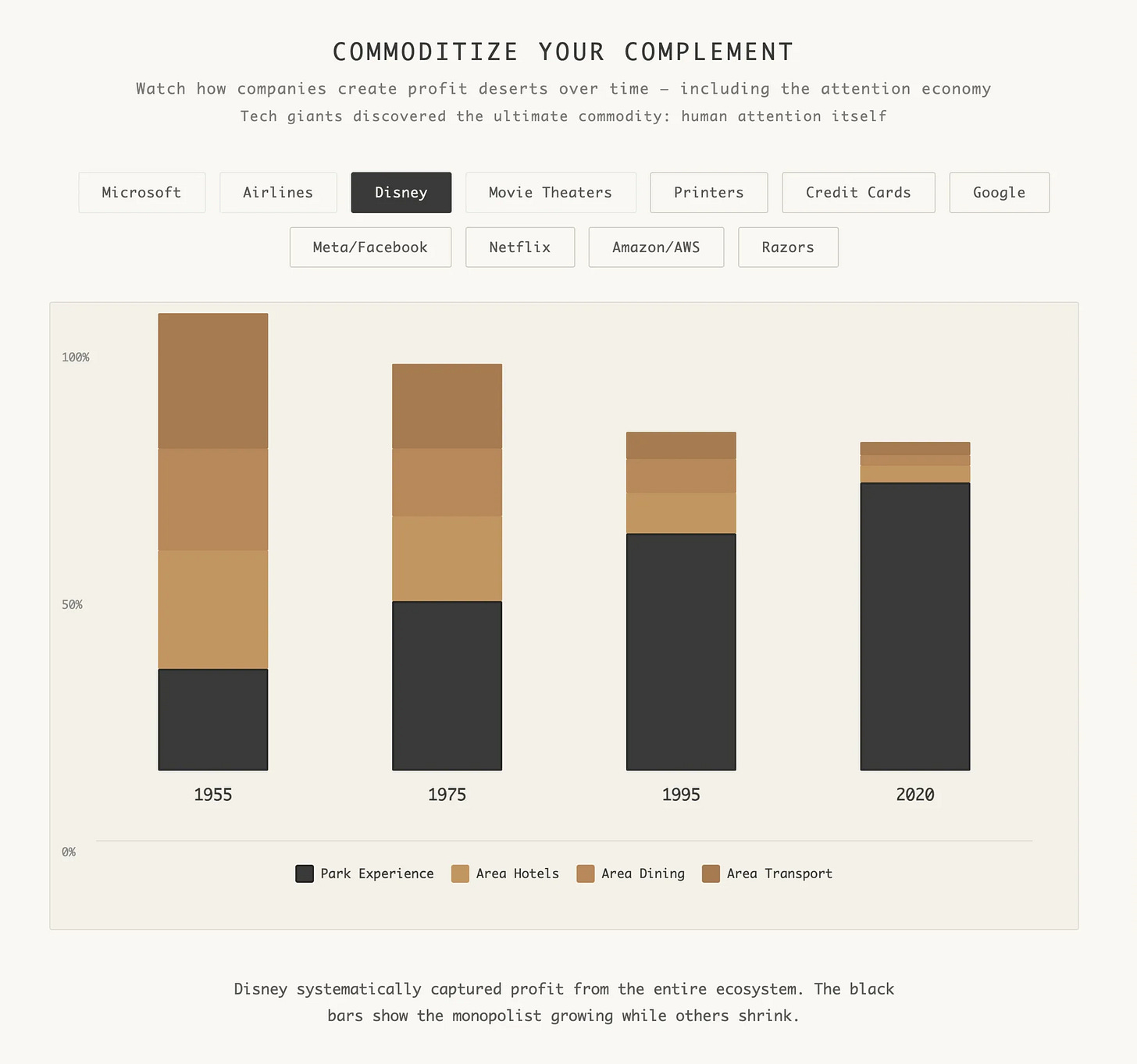

disney makes margins on in-park purchases, so they make the hotels, parking, and flights cheaper [commodity prices]

casinos make money on gambling, so they make the hotel room, the food, and the drinks cheaper [commodity prices]

google makes money on ads they serve when you search: the more expensive it is to search, the less people search

so google builds chrome, and gives it away for free

every free chrome download is another funnel into google’s $207 billion advertising machine

facebook took this one step further, and thought “the more expensive phones and wifi are, the fewer people are on facebook”

so facebook paid phone manufacturers to preinstall their app. literally handed samsung bags of cash to put a facebook icon on the home screen

and then subsidized those phones, along with wifi, for distribution across africa

cheaper wifi, cheaper phones = more users = more ads

microsoft did it first with pcs and ms-dos. they wanted to sell software, so they made hardware a commodity. they didn't build pcs - they made pcs so cheap to build that compaq and dell fought each other to the death while microsoft collected windows licenses from every corpse.

so businesses in tech will pick a profit center w/ fat margins and burn everything else around it to the ground. make your complements free. make them commodities. make them so cheap that nobody else can make money on them.

the four kingdoms of profit

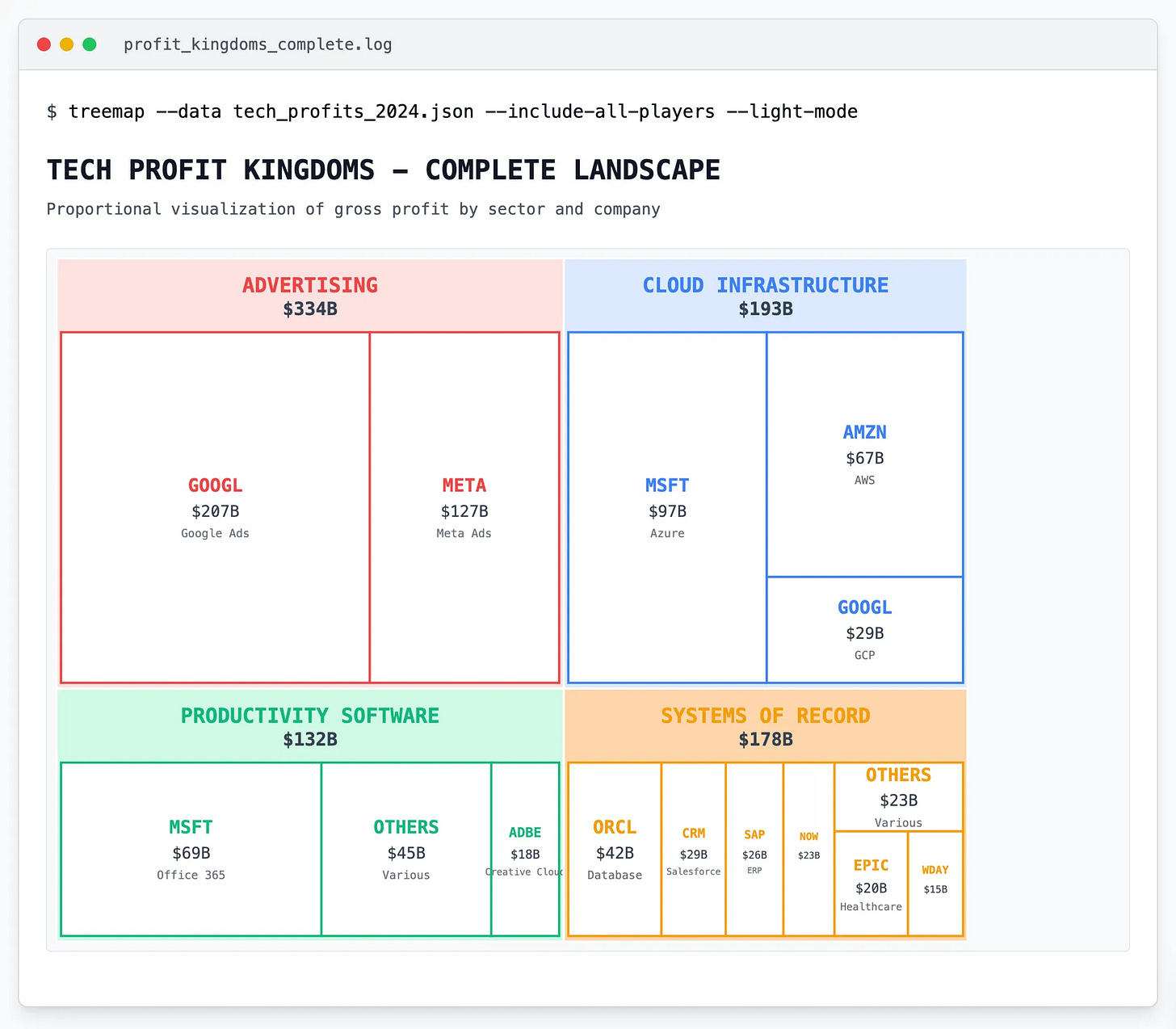

so where can you make profit if you’re a promising agi lab? here are the 4 places where all of tech’s profit lives:

advertising: $334 billion. google ads alone does $207 billion. meta adds another $127 billion. together they make more profit than most countries' gdp.

cloud: $193 billion. aws ($67b), azure ($97b), gcp ($29b). they sell boring infrastructure at criminal margins.

systems of record: $132 billion. salesforce. oracle. sap. the databases that run the world and that nobody understands.

productivity: $132 billion. microsoft office. adobe creative suite. the stuff you hate using but can't live without.
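a quick sanity check on the arithmetic behind those headline numbers, as a minimal python sketch. the figures are copied from the list above; the variable names are made up for illustration and nothing here is new data.

```python
# figures in billions of dollars, copied from the list above
advertising = {"google ads": 207, "meta": 127}
cloud = {"aws": 67, "azure": 97, "gcp": 29}

# headline totals quoted for each kingdom
headline = {
    "advertising": 334,
    "cloud": 193,
    "systems of record": 132,
    "productivity": 132,
}

# the component figures should add up to the quoted headline totals
ad_total = sum(advertising.values())
cloud_total = sum(cloud.values())

# advertising's share of the four kingdoms combined
all_kingdoms = sum(headline.values())
ad_share = headline["advertising"] / all_kingdoms

print(f"advertising: {ad_total}b, cloud: {cloud_total}b")
print(f"advertising share of all four: {ad_share:.0%}")
```

on these numbers the components match the headlines, and advertising alone is a bit over 40% of the four kingdoms combined.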

you pick one kingdom. you defend it with your life. you burn everything else to defend it.

so what was the api inference market?

inference was supposed to be the next aws

ai inference fits neatly into the cloud computing profit bucket. it's a value-added service (the model weights) that you package with gpus to sell to buyers at a nice markup. same playbook as snowflake adding sql on top of ec2, or dropbox adding sync on top of s3.

think about what aws actually is. amazon takes commodity hardware that you could buy yourself, wraps it in apis, and charges a 70% markup for not having to think about it. ec2 instances are just servers. s3 is just hard drives. but bezos figured out that enterprises would pay obscene margins for someone else to manage the complexity.

inference should work the same way. take commodity gpus, add your proprietary models, wrap it in enterprise features, charge for peace of mind.

except it's harder to create a new profit center than people think, and the neo-clouds learned this the hard way. together.ai and fireworks run low double digit margins. modal marks up sandboxes by 10%. aws, gcp, and azure all offer inference basically at cost.

but anthropic was making real headway

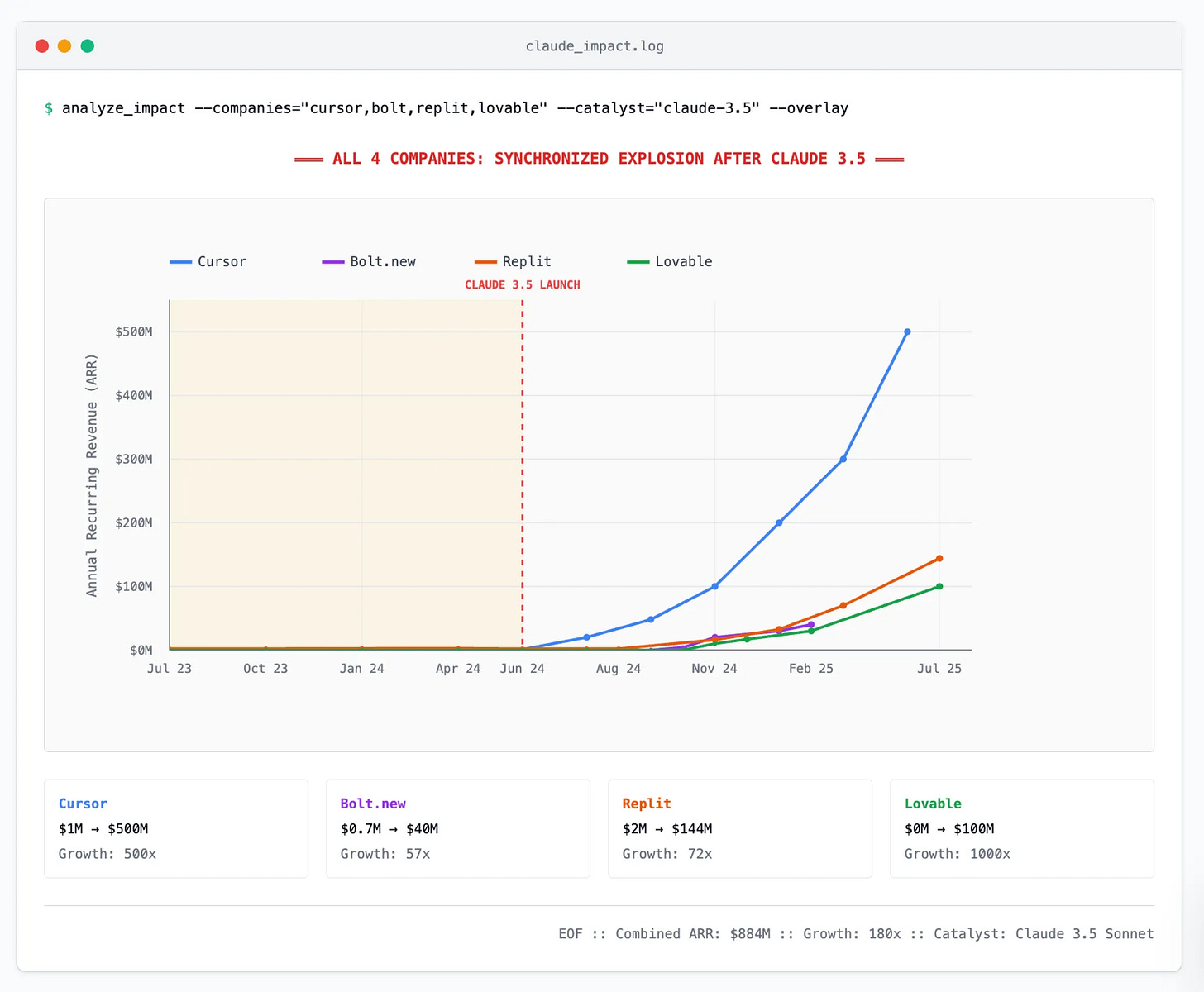

look at that chart. anthropic almost 3x’d market share while openai fell from 50%.

they did it while keeping prices flat: $75/million for opus, $15/million for sonnet across versions 3.0, 3.5, 3.7, and 4.0.

how? they dominated coding and had a consistently better model for almost 2 years.

claude 3.5 was the breakthrough that spawned an entire ecosystem - cursor and windsurf for code editors, lovable and bolt for app builders

superior function calling that actually worked in production

five-nines uptime through bedrock and vertex partnerships

no consumer drama (reps could ask: "you want the same ai making ghibli cartoons running your trading desk?")

the coding dominance was total. every major coding tool - cursor, windsurf, lovable, bolt, replit - runs on claude. anthropic owns 42% of the coding assistant market, twice their nearest competitor. that gave them pricing power and forced their ecosystem to get used to their price point

the customers followed: bridgewater's analysts, gitlab's engineers, financial firms deploying through deloitte and pwc. not everyone was happy about it, though. companies like replit, lovable and cursor had originally designed their business models around the expectation that frontier tokens would get cheaper. as it turned out, tokens were getting more expensive

dario recently told alex kantrowitz the quiet part out loud: "we make improvements all the time that make the models, like, 50% more efficient than they are before. we are just the beginning of optimizing inference... for every dollar the model makes, it costs a certain amount. that is actually already fairly profitable."

translation: despite flat pricing and winning market share, they've turned inference into a profit center. the coding capability edge meant the entire surge in ai coding happened on anthropic's infrastructure.

eighteen months ago, openai owned this market. but they didn't focus on coding superiority or enterprise reliability. anthropic did the boring stuff and built the aws of ai.

openai strikes back

openai's response was to set the entire business model on fire and move to a different arena.

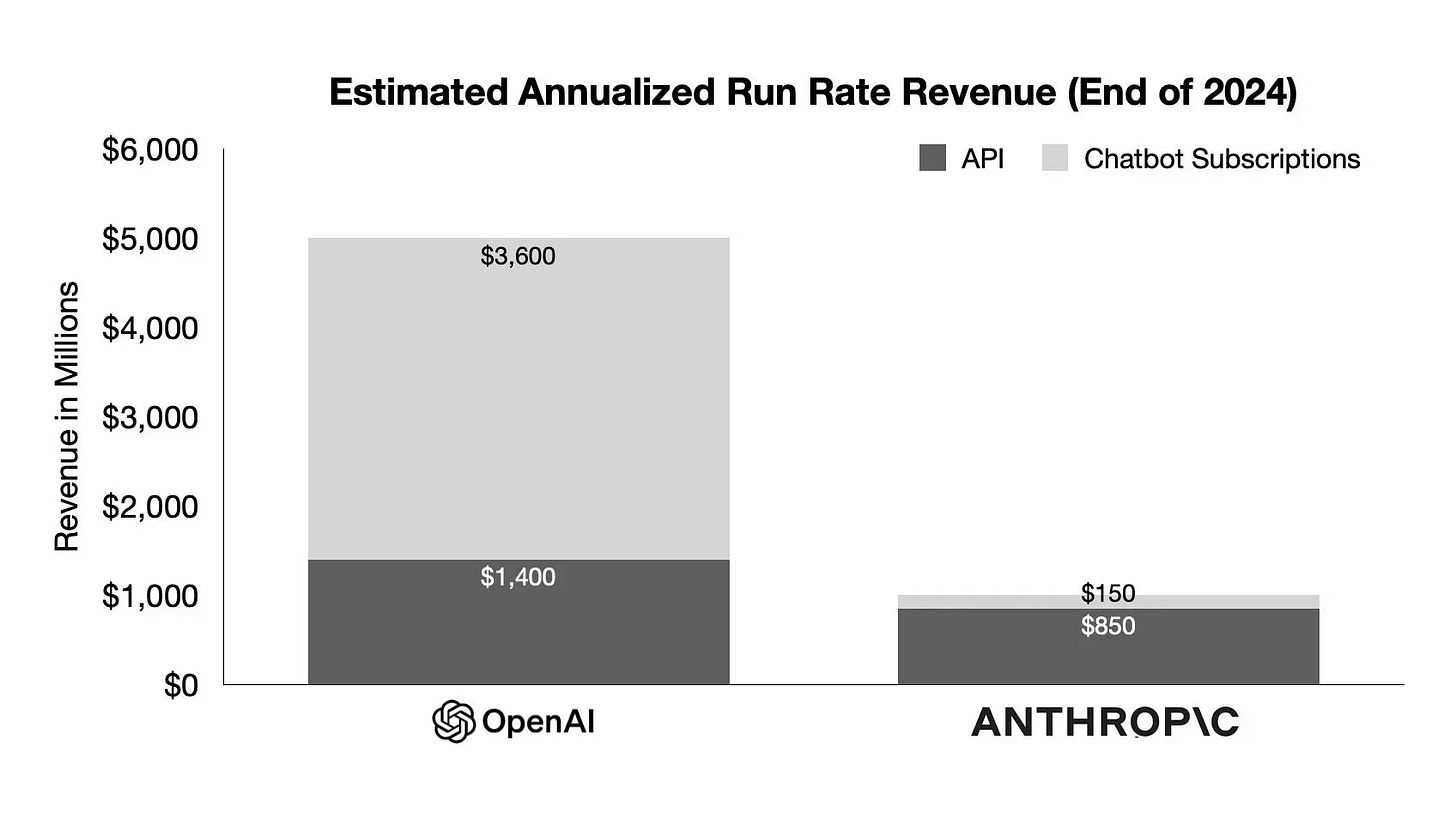

openai was never just an api company. $3.6 billion in consumer subscriptions versus $1.4 billion in api revenue. they make more money from consumers paying $20/month than from enterprises paying millions. and there's only one way consumer businesses make real money: advertising.

remember those four kingdoms of profit? advertising is the monster - $334 billion in pure profit, not revenue. profit. and that monster is hungry. it wants everything free. it wants browsers free (that's why google built chrome). it wants phones free (that's why facebook subsidized manufacturers). anything that stands between users and spending more time staring at screens? the monster wants it dead. and now the monster wants tokens free. google and meta need this too - they'll subsidize inference to infinity to protect their $207 billion and $127 billion advertising empires. every dollar of ad profit creates more pressure to commoditize anthropic's business model.

their hiring decisions reflect where they're going. in december they hired kate rouch as their first cmo - the same person who ran ads at meta for 11 years and put ads in instagram's feed. in may they brought in fidji simo, instacart's ceo, as head of applications. you don't hire the architect of facebook's ad machine to optimize subscription pricing. you hire them to summon the monster. openai doesn't need subscription revenue. they need to become the default place people go to think, to search, to create.

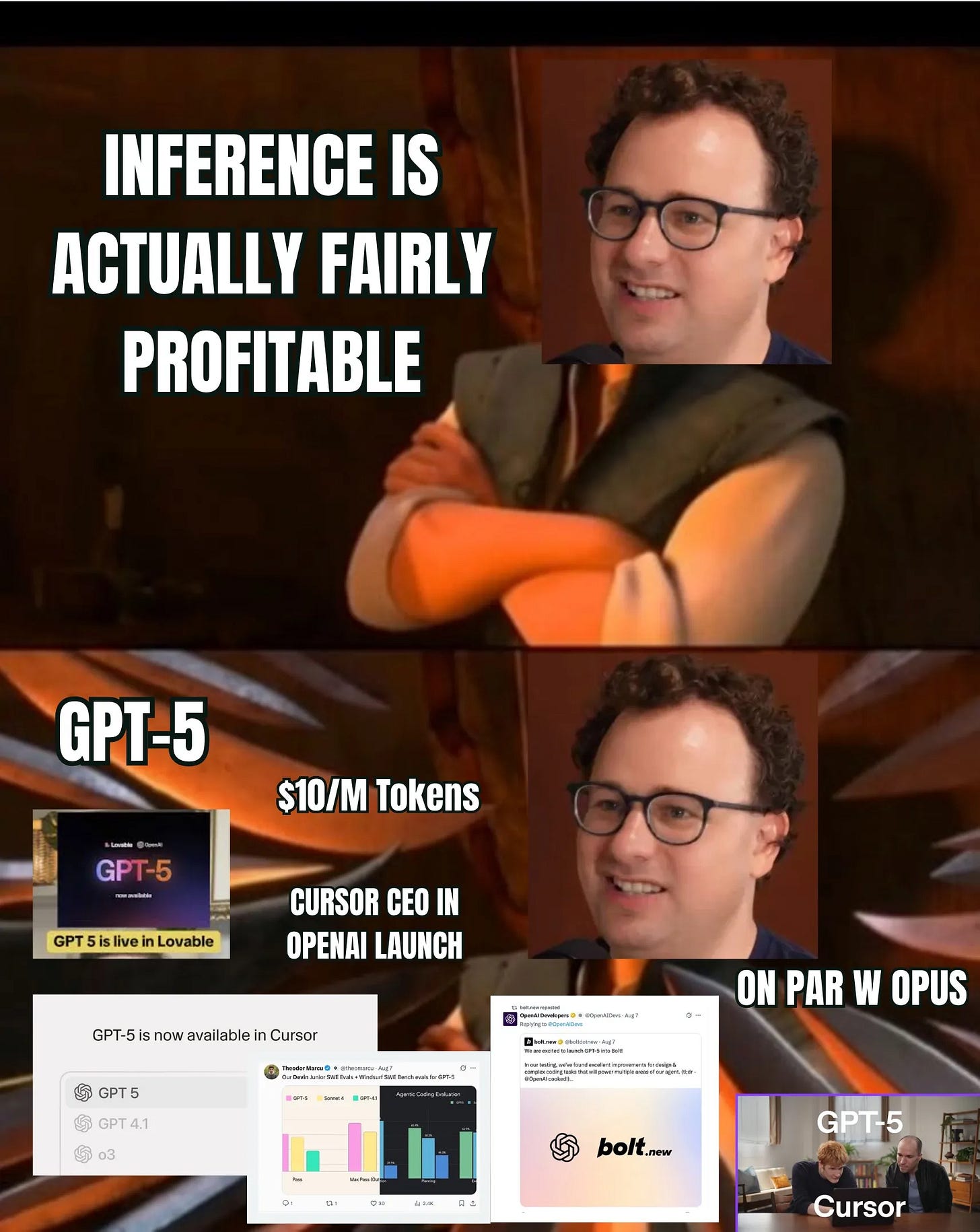

so they torched their api business on the way out, by releasing gpt-5: a model that matches claude on coding benchmarks at a cost of $10 per million tokens. every application layer company that was previously at the mercy of anthropic’s price point switched overnight: cursor, lovable, bolt, devin, windsurf were all launch partners in openai’s announcement.

openai didn’t need to do this. they could have kept a decent margin on the api, matched anthropic’s $15/$75 price standard to make some extra money, and still marched into advertising. instead they chose to price below the market rate anthropic had set, a move that really only signals their willingness to prevent this market from existing
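to put rough numbers on that price gap, here is a minimal python sketch. the per-million prices are the ones quoted in this post; the function names and the 2 billion tokens a month workload are hypothetical, picked only to make the difference concrete.

```python
# list prices in dollars per million tokens, as quoted in this post
PRICE_PER_MILLION = {"gpt-5": 10.0, "sonnet": 15.0, "opus": 75.0}


def monthly_bill(model: str, tokens: int) -> float:
    """dollars spent on `tokens` tokens at the quoted list price."""
    return PRICE_PER_MILLION[model] * tokens / 1_000_000


def price_ratio(model: str, baseline: str = "gpt-5") -> float:
    """how many times more expensive `model` is than `baseline`."""
    return PRICE_PER_MILLION[model] / PRICE_PER_MILLION[baseline]


# hypothetical workload, not a figure from the post
HYPOTHETICAL_TOKENS = 2_000_000_000

for model in PRICE_PER_MILLION:
    bill = monthly_bill(model, HYPOTHETICAL_TOKENS)
    print(f"{model}: ${bill:,.0f}/month, {price_ratio(model):.1f}x gpt-5")
```

at these list prices opus works out to 7.5x gpt-5 and sonnet to 1.5x. for an app company whose biggest cost line is tokens, that gap goes straight to gross margin.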

anthropic island

anthropic is now the only relevant agi lab trying to capture margins on training and providing models. everyone else went open source (meta's llama) or aligned with advertising (google, openai).

the neo-clouds - together, modal, replicate - are frenemies at best. they run 10-30% markups on gpu resale. every dollar of margin anthropic captures is a dollar the neo-clouds don't get, while every dollar openai discounts increases volume, which makes them happy. they want throughput, not anthropic's pricing power. even aws, anthropic's theoretical partner, treats inference as a gateway drug to lock enterprises into its $67 billion infrastructure ecosystem.

anthropic has cards to play though. dario's mentioned that rl, interpretability, fine-tuning - these are all things they might still charge real margins for. their enterprise contracts are sticky. if you know coupa or sap, you know enterprises aren't as cost-sensitive as consumers. they'll pay for reliability, compliance, features that actually matter to fortune 500s.

they could double down on application layer margins too. claude code is starting to look more like cursor or devin. claude desktop is making artifacts hostable, which puts them in competition with lovable and bolt. they could even enter the system of record or productivity realms one day. move up the stack to where the real $132 billion profit pools live.

but here's what this divergence really means: it won't be existential for openai to be competitive in the api business anymore, but it'll be existential for anthropic. openai can let the api business die - they have consumer subscriptions and advertising to chase. anthropic needs those api margins to survive. we're watching these agi labs' paths diverge in real time. openai is willing to burn inference margins forever if they're moving to ads. anthropic is trying to build a sustainable business model while openai is burning the entire category to capture something bigger.

the fundamental dynamic has shifted. openai chose advertising - the $334 billion kingdom. anthropic chose infrastructure - the thing everyone else gives away to sell something better. burning inference margins was a declaration of war, not against anthropic, but against google. against the old world. against anyone who thinks ai is about infrastructure instead of attention.

thank you to matteo carraba, mark hay, andy jiang, melek somai, nikhil sachdev, shawn wang, george kunthara, and jeffrey wang for reading drafts of this

Do you still run a company or is it just banger factory now

I stopped reading when you said "disney makes margins on in-park purchases, so they make the hotels, parking, and flights cheaper". Have you been to Disney? Parking is $35. The hotels are the most expensive in the area; Grand Californian is like 1k per night or something insane. Disney charges a premium on in-park purchases and charges a premium on hotels and parking, and I'm certain they would charge a premium on airline tickets if they owned an airline.